For a tutorial on installing and using the LearningTrees Bundle, as well as other HPCC Machine Learning methods, see *Using HPCC Machine Learning*. For an introduction to Machine Learning, see *Machine Learning Demystified*. This article assumes general knowledge of Machine Learning and its terminology.


In this article, we take a dive into the world of Decision Tree Models, which we refer to as Learning Trees. We explore the mechanisms and the science behind the various Decision Tree methods. Additionally, we provide an overview of HPCC’s free LearningTrees Bundle, which offers efficient, parallelized implementations of these methods.

# Random forest pros and cons #

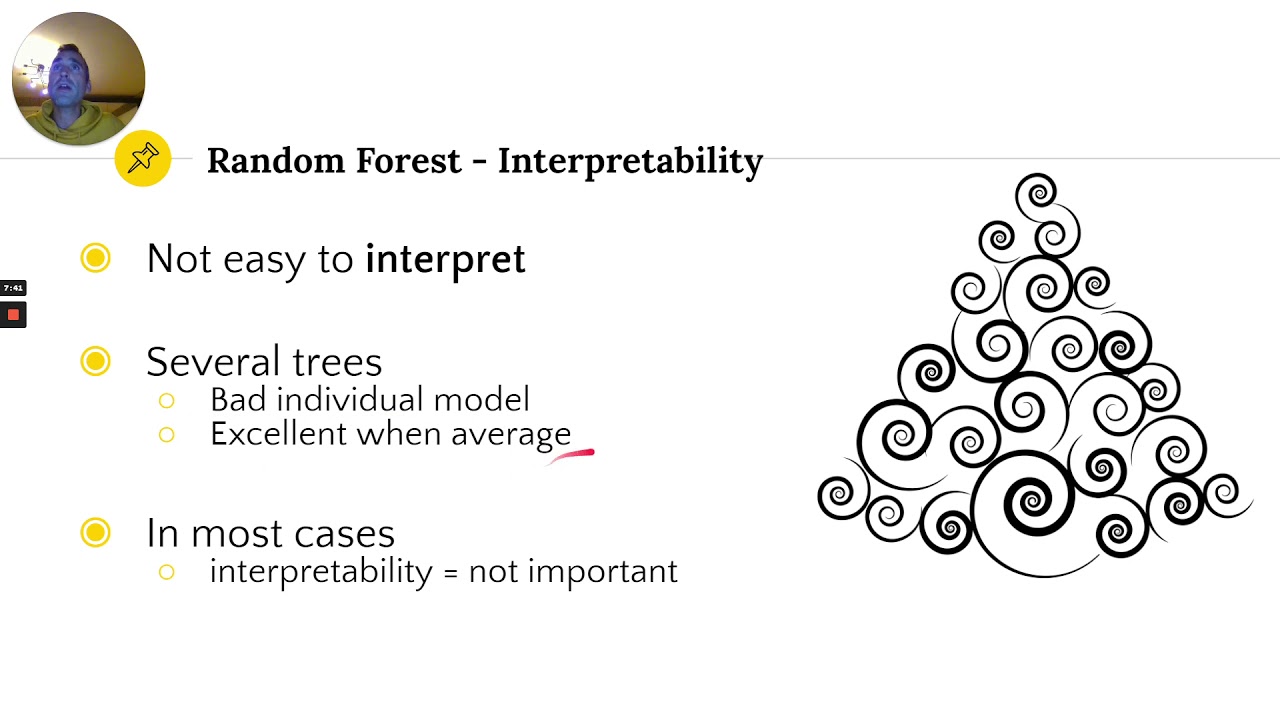

They offer feature importance, but unlike linear regression, they do not offer full visibility into the coefficients.

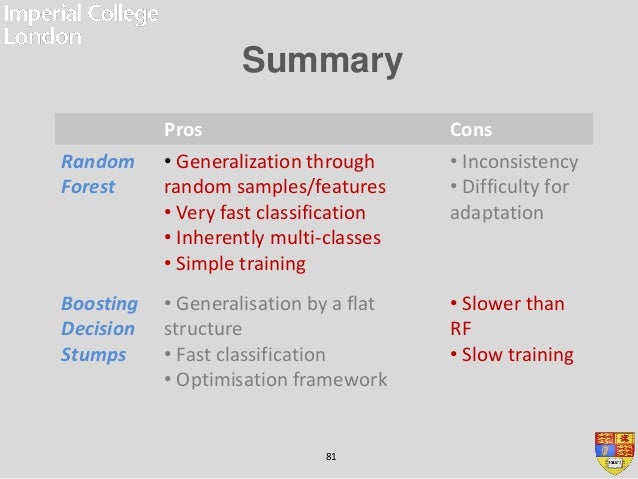

The following are the Random Forest algorithm’s drawbacks:

Both linear and non-linear relationships are well-handled by random forests.The variables are binned to achieve this. Outliers do not significantly impact random forests.The Random Forest algorithm maintains good accuracy despite a significant amount of missing data.Even after providing data without scaling, it still maintains good accuracy. The random forest algorithm does not require data scaling.Random forests are extremely adaptable and highly accurate.This makes it a great model for working with data with many different features. It constructs each decision tree using a random set of features to accomplish this. Random forests automatically create uncorrelated decision trees and carry out feature selection.The random forest has a lower variance than a single decision tree.In most cases, no scaling or transformation of the variables is required. Both categorical and numerical data can be used with it. Random forests perform better for a wide range of data items than a single decision tree.By averaging or combining the outputs of various decision trees, random forests solve the overfitting issue and can be used for classification and regression tasks.The Random Forest algorithm has the following benefits: Finally, choose the prediction result that received the most votes as the final prediction result. Voting will be conducted for each predicted outcome in the following step. The prediction outcome from each decision tree will then be obtained. A decision tree will then be built by this algorithm for each sample. With the help of the subsequent steps, we can comprehend how the Random Forest algorithm functions: Start by choosing random samples from a pre-existing dataset. Because the ensemble method averages the results, it reduces over-fitting and is superior to a single decision tree.
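The bootstrap-and-vote procedure described in this section can be sketched in plain Python. This is a minimal, illustrative sketch under stated assumptions. It is not the HPCC LearningTrees Bundle (which is an ECL bundle) or any library's API. All helper names (`build_tree`, `random_forest`, `predict_forest`, etc.) and the default parameters are hypothetical. The trees are small CART-style classifiers that use Gini impurity. As in the usual formulation of random forests, a fresh random subset of features is drawn at every split.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a non-empty list of class labels (0.0 means the node is pure)."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def majority(labels):
    """Most common label; ties go to the label seen first."""
    return Counter(labels).most_common(1)[0][0]


def split(rows, labels, feature, threshold):
    """Partition (row, label) pairs on row[feature] <= threshold."""
    left = [(r, lab) for r, lab in zip(rows, labels) if r[feature] <= threshold]
    right = [(r, lab) for r, lab in zip(rows, labels) if r[feature] > threshold]
    return left, right


def build_tree(rows, labels, max_depth, n_features, rng):
    """Grow a small classification tree, trying n_features random features per split."""
    if max_depth == 0 or len(set(labels)) == 1:
        return ("leaf", majority(labels))
    best = None  # (weighted Gini impurity, feature, threshold)
    for f in rng.sample(range(len(rows[0])), n_features):
        for t in sorted({r[f] for r in rows}):
            left, right = split(rows, labels, f, t)
            if not left or not right:
                continue  # skip splits that leave one side empty
            score = (len(left) * gini([lab for _, lab in left])
                     + len(right) * gini([lab for _, lab in right])) / len(rows)
            if best is None or score < best[0]:
                best = (score, f, t)
    if best is None:  # no candidate feature could separate the rows
        return ("leaf", majority(labels))
    _, f, t = best
    children = []
    for side in split(rows, labels, f, t):
        child_rows = [r for r, _ in side]
        child_labels = [lab for _, lab in side]
        children.append(build_tree(child_rows, child_labels, max_depth - 1, n_features, rng))
    return ("node", f, t, children[0], children[1])


def predict_tree(node, row):
    """Walk from the root to a leaf and return the leaf's label."""
    while node[0] == "node":
        _, f, t, left, right = node
        node = left if row[f] <= t else right
    return node[1]


def random_forest(rows, labels, n_trees=25, max_depth=3, n_features=1, seed=0):
    """Train n_trees trees, each on its own bootstrap sample of the data."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # Draw a random sample of the same size, with replacement (bootstrap).
        idx = [rng.randrange(len(rows)) for _ in range(len(rows))]
        # Build a decision tree on that sample.
        forest.append(build_tree([rows[i] for i in idx], [labels[i] for i in idx],
                                 max_depth, n_features, rng))
    return forest


def predict_forest(forest, row):
    """Collect one prediction per tree and return the majority vote."""
    votes = [predict_tree(tree, row) for tree in forest]
    return majority(votes)


# Toy data: two features that both separate class 0 (small values) from class 1.
X = [[i, i] for i in range(10)]
y = [0] * 5 + [1] * 5
forest = random_forest(X, y)
print(predict_forest(forest, [0, 0]), predict_forest(forest, [9, 9]))
```

For regression, `predict_forest` would average the trees' numeric outputs instead of taking a majority vote. Drawing features at each split rather than once per tree is one common design choice. Either way, the aim is to make the individual trees less correlated.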
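A standard identity for the variance of an average makes concrete the claim that a random forest has lower variance than a single decision tree. As an idealized assumption, suppose B trees each produce a prediction T_b(x) at a point x. Each prediction has the same variance σ², and every pair of trees has the same correlation ρ. The symbols B, T_b, σ², and ρ are introduced here only for illustration.

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} T_b(x)\right)
  = \frac{1}{B^{2}}\left[B\sigma^{2} + B(B-1)\rho\sigma^{2}\right]
  = \rho\sigma^{2} + \frac{1-\rho}{B}\,\sigma^{2}
```

As B grows, the second term shrinks toward zero, but the ρσ² term remains. This is why building decorrelated trees from random subsets of features matters: lowering ρ lowers the variance floor that adding more trees cannot remove.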
